Ijraset Journal For Research in Applied Science and Engineering Technology


Enhancing Crop Classification in Smart Agriculture Using Multiclass Deep Learning Models

Authors: Jagdip Singh, S. Muthukumar, N Jagadeesh, D. Bhadru, V. Manjula, K. Shanthi, V. Mallikarjuna Naik, C. Usha Nandhini

DOI Link: https://doi.org/10.22214/ijraset.2024.65630


Abstract

Smart agriculture is increasingly recognized as a vital approach to the challenges of modern farming, including the need for improved yield prediction and efficient resource management. A cornerstone of this approach is accurate crop classification, which directly informs decision-making and resource allocation. In this study, we investigate the effectiveness of four deep learning models for multiclass crop classification: VGGNet (VGG16), a custom Sequential convolutional model, an Artificial Neural Network (ANN), and ResNet50. The models are evaluated on a diverse crop image dataset covering three crop classes. The methodology applies preprocessing (pixel standardization, resizing, and data augmentation) to improve data quality, followed by training and validation of each model. VGG16 achieved the highest accuracy (97.8%), followed by ResNet50 (89.3%), the Sequential model (85.6%), and the ANN (83.2%), while ResNet50 performed more consistently across crop classes. This comparison reveals the relative strengths and limitations of each model and their applicability to different agricultural scenarios. The findings highlight the potential of deep learning for precise crop identification, with possible extensions to early detection of crop health issues, supporting smarter and more sustainable farming practices. This work lays a foundation for integrating advanced AI solutions into agriculture and for more efficient, resilient crop management systems.


I. INTRODUCTION

Agriculture is the backbone of many economies, providing essential resources and sustaining livelihoods. As global demands on agricultural productivity increase, integrating technological advancements becomes critical for enhancing crop management and output. Traditional crop classification methods, which rely heavily on manual observation and conventional algorithms, are often labor-intensive, time-consuming, and prone to inaccuracies. Deep learning, with its robust image processing capabilities, has emerged as a transformative tool for efficiently and accurately classifying crop images, revolutionizing the field of smart agriculture.

Recent studies have demonstrated significant advancements in this domain. For instance, the work in [1] introduces a hybrid model combining Convolutional Neural Networks (CNNs) with Long Short-Term Memory (LSTM) networks for weed detection and classification, highlighting its efficiency in precision agriculture. Similarly, [2] explores vision-based adaptive variable rate spraying using unmanned aerial vehicles (UAVs), optimizing pesticide and fertilizer application in agricultural fields. Moreover, [3] presents a novel application of the EfficientNetB4 deep learning model for classifying chili leaf diseases, enhancing precision in multiclass disease identification. A notable contribution in [4] discusses the integration of autonomous operation and color-based vision systems in agricultural robotics for precise pesticide spraying, emphasizing its utility in modern farming.

Convolutional Neural Networks, such as AlexNet, VGGNet, and ResNet, have established themselves as cornerstones for image classification due to their ability to capture spatial hierarchies in data [5][6].
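To make the notion of a spatial hierarchy concrete, the following minimal sketch (pure Python, written for illustration rather than taken from any cited work) applies the two operations that CNNs stack repeatedly: a 2-D convolution followed by 2x2 max pooling. The 2x2 edge-detecting kernel is a hypothetical, hand-picked example; in a trained CNN the kernel values are learned from data.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in deep learning libraries, this is cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Toy 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-picked vertical-edge detector (a trained CNN would learn this).
edge_kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, edge_kernel)  # 4x4 map that responds where the edge is
pooled = max_pool2d(features)          # 2x2 summary that keeps the strongest responses
print(features)
print(pooled)
```

Stacking several such convolution and pooling stages lets deeper layers respond to combinations of the simple patterns detected earlier, which is the hierarchy referred to above.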

Research in [7] demonstrated their efficacy in distinguishing crop types using remote sensing imagery, while transfer learning approaches, as highlighted in [8][9], leverage pre-trained models like InceptionV3 and DenseNet to improve crop classification tasks with limited datasets. Furthermore, integrating temporal data with spatial information using models like RNNs and LSTMs has shown promise in analyzing sequential agricultural data [11].

This study investigates the performance of four deep learning models for multiclass crop classification: VGG16, a custom Sequential model, an Artificial Neural Network (ANN), and ResNet50. The models are evaluated on a diverse crop image dataset, with extensive preprocessing, training, and validation to ensure robustness. The primary objective is to develop a real-time classification system capable of accurately identifying crop types, thereby improving decision-making, resource allocation, and crop management. This work aims to contribute to sustainable agricultural practices and to drive innovation in smart agriculture through advanced AI methods. Work in related domains supports this direction: hybrid deep learning models have demonstrated superior accuracy in sensitive detection tasks such as breast cancer detection [12], and [14] reviews how integrating machine learning and deep learning algorithms has improved the performance of such detection systems. In agriculture, P. M. Manjunath et al. [13] presented an IoT-based agricultural robot for monitoring plant health and the environment, emphasizing the critical role of IoT in enabling artificial intelligence applications.

II. METHODOLOGY

This study focuses on developing and evaluating deep learning models for accurate crop classification. The process begins with collecting and preparing an extensive dataset of crop images, which includes three distinct crop classes. These images are sourced from diverse farming environments to ensure variability and robustness in the dataset.

A. Data Collection and Preprocessing

The dataset underwent multiple preprocessing steps to standardize and enhance its quality, enabling the models to perform optimally.

These preprocessing steps included:

- Standardization: Pixel values of all images were normalized to ensure uniformity across the dataset, reducing the influence of lighting variations and inconsistencies.

- Resizing: Images were resized to a consistent dimension, ensuring compatibility with the input size requirements of the models.

- Data Augmentation: To increase data diversity and mitigate overfitting, various transformations such as flips, rotations, shifts, and brightness adjustments were applied. These augmentations simulated real-world variations and improved the generalization capability of the models.
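The preprocessing steps above can be sketched as follows. This is a minimal illustration under stated assumptions: the paper does not report the target image size, the normalization range, or the augmentation parameters, so the [0, 1] pixel scaling, the nearest-neighbour resizing, the toy 4x4 target size, and the brightness range of ±0.2 are all assumptions, and rotations and shifts are omitted for brevity. Images are represented as nested lists of grayscale values.

```python
import random

def normalize(image):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in image]

def resize_nearest(image, out_h, out_w):
    """Resize to (out_h, out_w) by copying the nearest source pixel."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]

def hflip(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]

def adjust_brightness(image, delta):
    """Shift every normalized pixel by `delta`, clipping to the valid [0, 1] range."""
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in image]

def preprocess(image, size=(4, 4)):
    """Resize to a uniform (hypothetical) input size, then normalize pixel values."""
    return normalize(resize_nearest(image, size[0], size[1]))

def augment(image, rng, max_delta=0.2):
    """Randomly flip (probability 0.5) and brighten or darken one preprocessed image."""
    if rng.random() < 0.5:
        image = hflip(image)
    return adjust_brightness(image, rng.uniform(-max_delta, max_delta))

# Usage on a toy 2x2 grayscale image.
raw = [[0, 255], [128, 64]]
x = preprocess(raw)                   # 4x4 grid of values in [0, 1]
x_aug = augment(x, random.Random(0))  # seeded for reproducibility
```

In a typical pipeline, augmentation is applied on the fly to training images only, so each epoch sees slightly different variants, while validation and test images are only resized and normalized.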

B. Selection of Deep Learning Models

Four deep learning architectures were selected for this study based on their established effectiveness in image classification tasks:

1) VGGNet (VGG16)

- The 16-layer VGG16 architecture was chosen for its simplicity and consistent performance in image recognition.

- Its sequential design, featuring convolutional and pooling layers, facilitates hierarchical feature extraction, making it suitable for crop classification tasks.

2) Sequential Model

- This custom-built model comprises convolutional and pooling layers organized sequentially to capture spatial hierarchies in crop images.

- The architecture was designed specifically to handle the complexities of agricultural datasets, focusing on extracting meaningful patterns.

3) Artificial Neural Network (ANN)

- The ANN model serves as a baseline for comparison.

- Its feedforward design is simpler compared to other models, offering a straightforward approach for initial benchmarking.

4) ResNet50

- ResNet50, a 50-layer deep neural network, incorporates residual connections to address the vanishing gradient problem, enabling deeper architectures without degradation in performance.

- This model is particularly well-suited for handling complex image classification tasks where deeper layers are beneficial.
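The difference between the ANN baseline's plain feedforward layers and ResNet50's residual connections can be sketched with two small blocks. This is a simplification for illustration: ResNet50 actually uses convolutional bottleneck blocks with batch normalization, whereas the sketch uses two dense layers, and the weight values are hypothetical, chosen to make the effect of the shortcut easy to see.

```python
def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]

def dense(v, weights, bias):
    """Fully connected layer: out[k] = sum_j weights[k][j] * v[j] + bias[k]."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def plain_block(x, w1, b1, w2, b2):
    """Two stacked dense layers, as in a plain feedforward (ANN-style) network."""
    return relu(dense(relu(dense(x, w1, b1)), w2, b2))

def residual_block(x, w1, b1, w2, b2):
    """Same two layers, but the input is added back before the final ReLU: relu(F(x) + x)."""
    fx = dense(relu(dense(x, w1, b1)), w2, b2)
    return relu([f + xi for f, xi in zip(fx, x)])

# Hypothetical case where the learned layers contribute nothing (all-zero weights).
x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
bias = [0.0, 0.0]
plain = plain_block(x, zeros, bias, zeros, bias)        # signal is lost
residual = residual_block(x, zeros, bias, zeros, bias)  # shortcut still carries x through
```

Because the shortcut adds x unchanged, a block whose learned layers contribute little still passes its input forward, and during backpropagation the addition gives gradients a direct path to earlier layers; this is how residual connections ease the vanishing-gradient problem.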

C. Transfer Learning and Fine-Tuning

The selected models were initialized with weights pre-trained on ImageNet, a large-scale benchmark dataset, so that previously learned features could be reused and training accelerated. This transfer learning approach allowed the models to adapt quickly to the specific features of the crop image dataset. Fine-tuning then trained the models on the crop dataset while keeping the pre-trained weights of the earlier layers frozen, which reduces the risk of overfitting.
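Fine-tuning with frozen earlier layers can be illustrated in miniature. In the sketch below, which is a simplified stand-in rather than the study's implementation, a hypothetical fixed 2x2 weight matrix plays the role of the ImageNet-pretrained layers and is never updated, while a single linear output unit (the new classification head) is trained with plain stochastic gradient descent on squared error. The toy data, learning rate, and epoch count are assumptions.

```python
import copy

def features(x, backbone_w):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in backbone_w]

def head_output(f, head_w, head_b):
    """Trainable linear head producing a single score."""
    return sum(w * fi for w, fi in zip(head_w, f)) + head_b

def fine_tune(data, backbone_w, head_w, head_b, lr=0.1, epochs=1000):
    """Train only the head with squared-error SGD; backbone_w is read but never updated."""
    head_w = list(head_w)
    for _ in range(epochs):
        for x, y in data:
            f = features(x, backbone_w)
            err = head_output(f, head_w, head_b) - y  # gradient of 0.5 * err**2 w.r.t. the output
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b

backbone_w = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical "pretrained" weights, kept frozen
frozen_copy = copy.deepcopy(backbone_w)
data = [([1.0, 0.0], 2.0), ([0.0, 1.0], 1.0), ([1.0, 1.0], 3.0)]  # toy targets y = 2*x0 + x1
head_w, head_b = fine_tune(data, backbone_w, [0.0, 0.0], 0.0)
print(head_w, head_b)
```

In Keras, the same effect is usually obtained by setting `trainable = False` on the pretrained base model (or on its earlier layers) before compiling, so that the optimizer only updates the remaining weights.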

D. Hyperparameter Optimization

To ensure optimal performance, key hyperparameters such as learning rate, batch size, and dropout rates were carefully tuned:

- Learning Rate: Adjusted dynamically using learning rate scheduling to enhance training efficiency.

- Batch Size: Optimized to balance training speed and memory usage.

- Dropout: Applied to prevent overfitting by randomly deactivating neurons during training.

- Early Stopping: Used to halt training once validation performance ceased improving, reducing unnecessary computation.
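The paper does not report the schedule, patience, or other settings it used for learning-rate scheduling and early stopping. The sketch below shows one common combination under stated assumptions: a step-decay schedule that halves the learning rate every two epochs, and early stopping with a patience of three epochs on validation loss. The initial learning rate and the validation losses are hypothetical values, not results from this study.

```python
def step_decay(initial_lr, epoch, drop=0.5, every=10):
    """Step-decay schedule: multiply the learning rate by `drop` every `every` epochs."""
    return initial_lr * drop ** (epoch // every)

class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience    # epochs to wait without improvement
        self.min_delta = min_delta  # smallest decrease that counts as an improvement
        self.best = float("inf")
        self.best_epoch = None
        self.wait = 0

    def update(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience

# Hypothetical validation losses standing in for a real training run.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.52, 0.53, 0.49]
stopper = EarlyStopping(patience=3)
lr_history = []
for epoch, val_loss in enumerate(val_losses):
    lr = step_decay(1e-3, epoch, drop=0.5, every=2)
    lr_history.append(lr)
    # ... one epoch of training with learning rate `lr` would run here ...
    if stopper.update(epoch, val_loss):
        break

print(f"stopped after epoch {epoch}; best epoch {stopper.best_epoch}, best loss {stopper.best}")
```

With these numbers the loss last improves at epoch 3, so training halts after epoch 6 and never sees the lower loss at epoch 7; this is the trade-off the patience value controls. Keras provides ready-made equivalents as `tf.keras.callbacks.EarlyStopping` (for example with `monitor="val_loss"` and `restore_best_weights=True`) and `tf.keras.callbacks.LearningRateScheduler`.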

E. Training and Validation

Each model was trained on the pre-processed dataset using a supervised learning approach. The dataset was split into training, validation, and testing subsets to evaluate the models' generalization capabilities. During training:

- Backpropagation was employed to minimize error by adjusting model weights.

- Optimization Algorithms such as Adam were used to ensure efficient convergence.
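The paper names backpropagation and Adam without giving their settings. As a hedged illustration of how the two fit together, the sketch below fits the single weight of a toy linear unit y = w * x: the gradient of the mean squared error is obtained by the chain rule (which is what backpropagation computes, here for one layer), and the weight is then updated with the standard Adam rule using its commonly cited defaults beta1 = 0.9, beta2 = 0.999 and eps = 1e-8. The learning rate of 0.1 (higher than the usual 0.001 default, so that the toy problem converges quickly), the step count, and the data are assumptions.

```python
import math

def adam_minimize(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a loss over one scalar parameter with Adam, given its gradient function."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients (first moment)
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients (second moment)
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy data for a single linear unit y = w * x whose true weight is 3.
xs = [1.0, 2.0, 3.0]
ys = [3.0 * x for x in xs]

def mse_grad(w):
    """Chain-rule gradient of the loss: d/dw mean((w*x - y)^2) = mean(2*x*(w*x - y))."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)

w = adam_minimize(mse_grad, w0=0.0)
print(round(w, 3))
```

Dividing by the square root of the second-moment estimate gives each parameter its own effective step size, which is why Adam usually converges with little manual tuning of the learning rate.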

F. Performance Metrics

To comprehensively evaluate the models, several metrics were calculated, including:

- Accuracy: Measures the overall correctness of the model's predictions.

- Precision: Indicates the proportion of true positive predictions among all positive predictions.

- Recall: Reflects the model's ability to identify all relevant instances within the dataset.

- F1-Score: Provides a harmonic mean of precision and recall, offering a balanced metric for imbalanced datasets.
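For a three-class problem, these metrics are computed per class, treating each class in turn as the "positive" class (one-vs-rest), and then averaged. The paper does not state which averaging it used; the sketch below assumes an unweighted (macro) average, and the class names and labels are hypothetical, not results from this study.

```python
from collections import Counter

def multiclass_metrics(y_true, y_pred):
    """Accuracy, per-class (precision, recall, F1), and their unweighted macro averages."""
    classes = sorted(set(y_true) | set(y_pred))
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    predicted = Counter(y_pred)  # TP + FP for each class
    actual = Counter(y_true)     # TP + FN for each class

    per_class = {}
    for c in classes:
        precision = true_pos[c] / predicted[c] if predicted[c] else 0.0
        recall = true_pos[c] / actual[c] if actual[c] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = (precision, recall, f1)

    accuracy = sum(true_pos.values()) / len(y_true)
    macro = tuple(sum(scores[i] for scores in per_class.values()) / len(classes) for i in range(3))
    return accuracy, per_class, macro

# Hypothetical labels for three crop classes.
y_true = ["crop_a"] * 3 + ["crop_b"] * 3 + ["crop_c"] * 4
y_pred = ["crop_a", "crop_a", "crop_b", "crop_b", "crop_b",
          "crop_a", "crop_c", "crop_c", "crop_c", "crop_b"]
accuracy, per_class, macro = multiclass_metrics(y_true, y_pred)
print(accuracy, per_class, macro)
```

Macro averaging gives each crop class equal weight regardless of how many images it contains, which is why it is informative when classes are imbalanced. `sklearn.metrics.precision_recall_fscore_support(y_true, y_pred, average="macro")` computes the same macro-averaged precision, recall, and F1.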

G. Comparative Analysis

The models' performance metrics were compared to identify each architecture's strengths and limitations, indicating which architecture is best suited to a given agricultural application and highlighting areas for future improvement. Through these steps, the study aimed to build a reliable framework for crop classification and to demonstrate the potential of deep learning for transforming agricultural practices, with results that are both scientifically sound and relevant to real-world applications.


III. RESULTS AND DISCUSSION

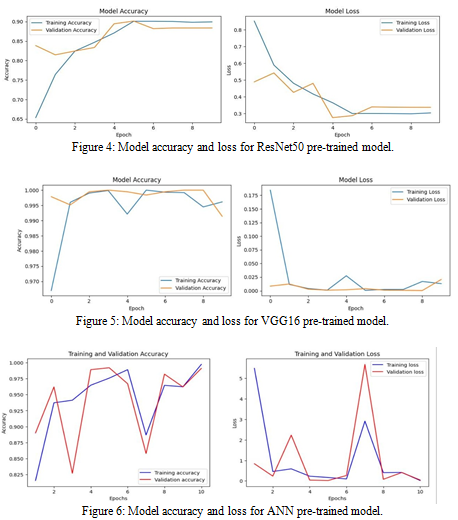

The results of the study underscore the strengths and weaknesses of the four evaluated models—VGG16, ResNet50, Sequential, and ANN—in handling multiclass crop classification. The performance metrics of each model reveal insights into their suitability for practical applications in smart agriculture.

A. Performance Comparison

The models demonstrated varying degrees of effectiveness in classifying crop images, with VGG16 and ResNet50 clearly outperforming the Sequential and ANN models:

1) Artificial Neural Network (ANN)

- Achieved an accuracy of 83.2%, marking the lowest performance among the models.

- As a simpler feedforward network, ANN struggled with the complex features of crop images, leading to suboptimal classification outcomes.

2) Sequential Model

- Achieved a slightly higher accuracy of 85.6%.

- Despite its hierarchical design with convolutional and pooling layers, the model could not match the depth and feature extraction capabilities of more advanced architectures like VGG16 and ResNet50.

3) VGG16

- Delivered the highest accuracy of 97.8%, showcasing its exceptional ability to extract and learn from the intricate features in crop images.

- However, the model occasionally failed to predict the correct class for certain crop images, indicating limitations in handling edge cases.

4) ResNet50

- Achieved an accuracy of 89.3%, second to VGG16 but with more consistent performance across all crop classes.

- Its residual learning framework enabled the model to handle complex features effectively, making it a reliable choice for scenarios requiring balanced performance and resilience.

B. Model Strengths and Weaknesses

- ANN and Sequential: These models, while foundational, were less adept at handling the complexity of the dataset. Their lower accuracy suggests they may be more suitable for simpler classification tasks or as benchmarks for comparison.

- VGG16: This model excelled in accuracy, making it ideal for applications where precision is paramount. However, its occasional misclassifications highlight the need for supplementary strategies, such as ensemble methods, to enhance reliability.

- ResNet50: Despite its lower overall accuracy compared to VGG16, ResNet50 demonstrated greater resilience, correctly classifying all of the query images it was tested on and showing no significant variation in performance across classes. This consistency makes it a strong candidate for real-world applications where reliability is crucial.

C. Practical Implications

The findings emphasize the importance of selecting the right model based on the specific needs of the application. While VGG16 is preferable for tasks requiring high precision, ResNet50 is better suited for scenarios demanding consistent performance across diverse inputs. Sequential and ANN models, despite their lower performance, can serve as lightweight options in resource-constrained environments or when processing simpler datasets.

D. Visualization of Results

The comparison of performance metrics, including accuracy, precision, recall, and F1-score for each model, is depicted in the accompanying plot. This visual representation provides a clear view of the relative strengths and weaknesses of the evaluated models, supporting informed decisions about future deployments in smart agriculture.

These results underscore the transformative potential of deep learning in agriculture. By carefully selecting models tailored to specific requirements, stakeholders can optimize crop classification, improve resource management, and foster sustainable farming practices. Future work will focus on exploring hybrid models and incorporating additional datasets to further enhance classification accuracy and reliability.

Figure 10: Prediction for a query image that is not part of the dataset.

Conclusion

This study demonstrates the potential of deep learning models to revolutionize crop classification in smart agriculture, enabling better yield prediction and resource management. By evaluating VGG16, ResNet50, Sequential, and ANN models, we identified significant differences in their performance and suitability for multiclass crop classification. VGG16 achieved the highest accuracy (97.8%), showcasing its capability to handle intricate features of crop images. However, its occasional misclassifications underline the need for further refinement. ResNet50, with its consistent performance and resilience (89.3% accuracy), proved more reliable across diverse scenarios, making it a practical choice for real-world applications. In contrast, the Sequential and ANN models, with accuracies of 85.6% and 83.2%, respectively, demonstrated limited effectiveness, highlighting the necessity of more sophisticated architectures for complex tasks. These findings emphasize the importance of selecting models based on application-specific criteria, such as precision or consistency. Future research should focus on hybrid models that combine the strengths of VGG16 and ResNet50, as well as leveraging larger and more diverse datasets to improve generalization. This work underscores the transformative potential of deep learning in agriculture, paving the way for innovative, efficient, and sustainable farming practices.

References

[1] Sheeraz Arif, Rajesh Kumar, Shazia Abbasi, Khalid Mohammadani, and Kapeel Dev, "Weeds Detection and Classification using Convolutional Long-Short-Term Memory," Research Square preprint, version 1, 2021. doi: https://doi.org/10.21203/rs.3.rs-219227/v1

[2] Linhui Wang, Yubin Lan, Xuejun Yue, Kangjie Ling, Zhenzhao Cen, Ziyao Cheng, Yongxin Liu, and Jian Wang, "Vision-based adaptive variable rate spraying approach for unmanned aerial vehicles," International Journal of Agricultural and Biological Engineering, vol. 12, 2019. doi: https://doi.org/10.25165/j.ijabe.20191203.4358

[3] V. Krishna Pratap and N. Suresh Kumar, "High-Precision Multiclass Classification of Chili Leaf Disease Through Customized EfficientNetB4 from Chili Leaf Images," 2023. doi: https://doi.org/10.1016/j.atech.2023.100295

[4] Mona Tahmasebi and Mohammad Gohari, "An Autonomous Pesticide Sprayer Robot with a Color-based Vision System," International Journal of Robotics and Control Systems, vol. 2, 2022. doi: https://doi.org/10.31763/ijrcs.v2i1.480

[5] A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," Advances in Neural Information Processing Systems (NeurIPS), vol. 25, pp. 1097-1105, 2012.

[6] Karen Simonyan and Andrew Zisserman, "Very Deep Convolutional Networks for Large-Scale Image Recognition," International Conference on Learning Representations (ICLR), 2015.

[7] Y. Zhao, L. Zhang, and X. Li, "Deep Learning for Crop Classification: A Comparative Study of Convolutional Neural Networks," IEEE Transactions on Geoscience and Remote Sensing, 2019.

[8] Jason Yosinski, Jeff Clune, Yoshua Bengio, and Hod Lipson, "How Transferable Are Features in Deep Neural Networks?," Advances in Neural Information Processing Systems 27, pp. 3320-3328, 2014. doi: https://doi.org/10.48550/arXiv.1411.1792

[9] Gao Huang, Zhuang Liu, Laurens van der Maaten, and Kilian Q. Weinberger, "Densely Connected Convolutional Networks," IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

[10] X. Li, Y. Zhang, Y. Wu, J. Xu, Y. Liu, Y. Zhang, and J. Liu, "Deep Learning for Plant Disease Detection and Classification: A Review," IEEE Access, 2020.

[11] Junyoung Chung, Caglar Gulcehre, Kyunghyun Cho, and Yoshua Bengio, "Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling," Neural Information Processing Systems (NIPS), 2014.

[12] Melwin D'souza, Ananth Prabhu Gurpur, and Varuna Kumara, "SANAS-Net: spatial attention neural architecture search for breast cancer detection," IAES International Journal of Artificial Intelligence (IJ-AI), vol. 13, no. 3, pp. 3339-3349, September 2024, ISSN: 2252-8938. doi: http://doi.org/10.11591/ijai.v13.i3.pp3339-3349

[13] P. M. Manjunath, Gurucharan, and M. DSouza Shwetha, "IoT Based Agricultural Robot for Monitoring Plant Health and Environment," Journal of Emerging Technologies and Innovative Research, vol. 6, no. 2, pp. 551-554, Feb. 2019.

[14] Melwin D Souza, Ananth Prabhu G, and Varuna Kumara, "A Comprehensive Review on Advances in Deep Learning and Machine Learning for Early Breast Cancer Detection," International Journal of Advanced Research in Engineering and Technology (IJARET), vol. 10, no. 5, pp. 350-359, 2019.

Copyright

Copyright © 2024 Jagdip Singh, S. Muthukumar, N Jagadeesh, D. Bhadru, V. Manjula, K. Shanthi, V. Mallikarjuna Naik, C. Usha Nandhini. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET65630

Publish Date : 2024-11-28

ISSN : 2321-9653

Publisher Name : IJRASET

